```python
import os

from tensorflow.keras import layers
from tensorflow.keras import Model

# Download the InceptionV3 weights without the top (classification) layers
!wget --no-check-certificate \
    https://storage.googleapis.com/mledu-datasets/inception_v3_weights_tf_dim_ordering_tf_kernels_notop.h5 \
    -O /tmp/inception_v3_weights_tf_dim_ordering_tf_kernels_notop.h5

from tensorflow.keras.applications.inception_v3 import InceptionV3

local_weights_file = '/tmp/inception_v3_weights_tf_dim_ordering_tf_kernels_notop.h5'

# Build the convolutional base for 150x150 RGB inputs, then load the weights
pre_trained_model = InceptionV3(input_shape=(150, 150, 3),
                                include_top=False,
                                weights=None)

pre_trained_model.load_weights(local_weights_file)

# Freeze every pre-trained layer so only the new head is trained
for layer in pre_trained_model.layers:
    layer.trainable = False

# pre_trained_model.summary()

# Use the output of 'mixed7' as the feature map for the new classifier
last_layer = pre_trained_model.get_layer('mixed7')
print('last layer output shape: ', last_layer.output_shape)
last_output = last_layer.output
```

```text
--2019-02-13 14:04:55-- https://storage.googleapis.com/mledu-datasets/inception_v3_weights_tf_dim_ordering_tf_kernels_notop.h5
Resolving storage.googleapis.com... 2607:f8b0:4003:c05::80, 64.233.168.128
Connecting to storage.googleapis.com|2607:f8b0:4003:c05::80|:443... connected.
WARNING: cannot verify storage.googleapis.com's certificate, issued by 'CN=Google Internet Authority G3,O=Google Trust Services,C=US':
Unable to locally verify the issuer's authority.
HTTP request sent, awaiting response... 200 OK
Length: 87910968 (84M) [application/x-hdf]
Saving to: '/tmp/inception_v3_weights_tf_dim_ordering_tf_kernels_notop.h5'
/tmp/inception_v3_w 100%[=====================>] 83.84M 75.6MB/s in 1.1s
2019-02-13 14:04:56 (75.6 MB/s) - '/tmp/inception_v3_weights_tf_dim_ordering_tf_kernels_notop.h5' saved [87910968/87910968]
```
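The `print` at the end of the first cell reports a `(None, 7, 7, 768)` output for `mixed7`. The 768 is the channel width of the mixed4 to mixed7 blocks; the 7x7 follows from the stride-2 stages a 150x150 input passes through. The sketch below traces that, assuming the (kernel, stride, padding) sequence listed in `STEPS`, which is my reading of the Keras InceptionV3 layer stack rather than something queried from the model.

```python
# Sketch: trace one spatial dimension of the input through the downsampling
# steps of InceptionV3 up to 'mixed7'.
# Assumption: STEPS reflects the tf.keras InceptionV3 stack as I understand it.

def conv_out(size, kernel, stride, padding):
    """Output length of one spatial dimension after a conv or pooling op."""
    if padding == "same":
        return -(-size // stride)  # ceil(size / stride)
    return (size - kernel) // stride + 1  # 'valid' padding

# (description, kernel, stride, padding)
STEPS = [
    ("conv 3x3, stride 2", 3, 2, "valid"),
    ("conv 3x3", 3, 1, "valid"),
    ("conv 3x3", 3, 1, "same"),
    ("max-pool 3x3, stride 2", 3, 2, "valid"),
    ("conv 1x1", 1, 1, "valid"),
    ("conv 3x3", 3, 1, "valid"),
    ("max-pool 3x3, stride 2", 3, 2, "valid"),
    # mixed0 to mixed2 keep the size; mixed3 downsamples with stride-2 branches
    ("mixed3 (3x3, stride 2)", 3, 2, "valid"),
    # mixed4 to mixed7 keep the size
]

def trace(size):
    """Apply every step in STEPS and print the running spatial size."""
    for name, kernel, stride, padding in STEPS:
        size = conv_out(size, kernel, stride, padding)
        print(f"{name:<24} -> {size}x{size}")
    return size

final = trace(150)  # 74, 72, 72, 35, 35, 33, 16, 7
```

The same trace on the standard 299x299 input ends at 17x17, the familiar size of InceptionV3's middle blocks, which is a quick sanity check on the step list.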

```text
('last layer output shape: ', (None, 7, 7, 768))
```

```python
from tensorflow.keras.optimizers import RMSprop

# Flatten the output layer to 1 dimension
x = layers.Flatten()(last_output)
# Add a fully connected layer with 1,024 hidden units and ReLU activation
x = layers.Dense(1024, activation='relu')(x)
# Randomly drop 20% of the hidden units during training
x = layers.Dropout(0.2)(x)
# Add a final sigmoid layer for binary classification
x = layers.Dense(1, activation='sigmoid')(x)

model = Model(pre_trained_model.input, x)

# 'lr' is deprecated in TF 2.x; the argument is now named 'learning_rate'
model.compile(optimizer=RMSprop(learning_rate=0.0001),
              loss='binary_crossentropy',
              metrics=['acc'])
```
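Because the whole InceptionV3 base is frozen, the only trainable weights are in the two new `Dense` layers (`Flatten` and `Dropout` have none). The arithmetic below is a small sketch of that count for the `(7, 7, 768)` feature map; if my accounting matches Keras', it should agree with the "Trainable params" line of `model.summary()`.

```python
# Sketch: count the trainable parameters of the classifier head on mixed7.

def dense_params(n_in, n_out):
    """A Dense layer has n_in * n_out weights plus one bias per output unit."""
    return n_in * n_out + n_out

flat = 7 * 7 * 768                  # Flatten() of a (7, 7, 768) feature map
hidden = dense_params(flat, 1024)   # Dense(1024, activation='relu')
output = dense_params(1024, 1)      # Dense(1, activation='sigmoid')

print(f"flattened features : {flat:,}")            # 37,632
print(f"Dense(1024) params : {hidden:,}")          # 38,536,192
print(f"Dense(1) params    : {output:,}")          # 1,025
print(f"trainable total    : {hidden + output:,}") # 38,537,217
```

Almost all of the trainable weights sit in the first `Dense` layer, because it connects every one of the 37,632 flattened features to each of the 1,024 hidden units.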

```python
!wget --no-check-certificate \
    https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip \
    -O /tmp/cats_and_dogs_filtered.zip

import os
import zipfile

from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Extract the archive into /tmp; the context manager closes the file
local_zip = '/tmp/cats_and_dogs_filtered.zip'
with zipfile.ZipFile(local_zip, 'r') as zip_ref:
    zip_ref.extractall('/tmp')

# Define our example directories and files
base_dir = '/tmp/cats_and_dogs_filtered'
train_dir = os.path.join(base_dir, 'train')
validation_dir = os.path.join(base_dir, 'validation')

train_cats_dir = os.path.join(train_dir, 'cats')            # Directory with our training cat pictures
train_dogs_dir = os.path.join(train_dir, 'dogs')            # Directory with our training dog pictures
validation_cats_dir = os.path.join(validation_dir, 'cats')  # Directory with our validation cat pictures
validation_dogs_dir = os.path.join(validation_dir, 'dogs')  # Directory with our validation dog pictures

train_cat_fnames = os.listdir(train_cats_dir)
train_dog_fnames = os.listdir(train_dogs_dir)

# Add our data-augmentation parameters to ImageDataGenerator
train_datagen = ImageDataGenerator(rescale=1./255.,
                                   rotation_range=40,
                                   width_shift_range=0.2,
                                   height_shift_range=0.2,
                                   shear_range=0.2,
                                   zoom_range=0.2,
                                   horizontal_flip=True)

# Note that the validation data should not be augmented!
test_datagen = ImageDataGenerator(rescale=1.0/255.)

# Flow training images in batches of 20 using train_datagen generator
train_generator = train_datagen.flow_from_directory(train_dir,
                                                    batch_size=20,
                                                    class_mode='binary',
                                                    target_size=(150, 150))

# Flow validation images in batches of 20 using test_datagen generator
validation_generator = test_datagen.flow_from_directory(validation_dir,
                                                        batch_size=20,
                                                        class_mode='binary',
                                                        target_size=(150, 150))
```

```text
--2019-02-13 14:05:24-- https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip
Resolving storage.googleapis.com... 2607:f8b0:4003:c0a::80, 173.194.223.128
Connecting to storage.googleapis.com|2607:f8b0:4003:c0a::80|:443... connected.
WARNING: cannot verify storage.googleapis.com's certificate, issued by 'CN=Google Internet Authority G3,O=Google Trust Services,C=US':
Unable to locally verify the issuer's authority.
HTTP request sent, awaiting response... 200 OK
Length: 68606236 (65M) [application/zip]
Saving to: '/tmp/cats_and_dogs_filtered.zip'
/tmp/cats_and_dogs_ 100%[=====================>] 65.43M 168MB/s in 0.4s
2019-02-13 14:05:24 (168 MB/s) - '/tmp/cats_and_dogs_filtered.zip' saved [68606236/68606236]
```
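Two of the transforms configured in `train_datagen` are easy to picture on a tiny array: `rescale=1./255` maps 0 to 255 pixel values into [0, 1], and `horizontal_flip=True` mirrors an image left to right at random. The sketch below applies just those two to a hypothetical 2x3 grayscale "image" stored as nested lists; it illustrates the arithmetic only and is not how `ImageDataGenerator` is implemented internally. The validation generator applies only the rescaling.

```python
# Sketch: rescaling and a random horizontal flip on a toy grayscale image.
# The image values and helper names are hypothetical, for illustration only.
import random

def rescale(image):
    """Map 0-255 integer pixels to floats in [0, 1] (same effect as rescale=1./255)."""
    return [[p / 255.0 for p in row] for row in image]

def random_horizontal_flip(image, rng):
    """Mirror each row left to right with probability 0.5."""
    if rng.random() < 0.5:
        return [row[::-1] for row in image]
    return [row[:] for row in image]

image = [[0, 128, 255],
         [64, 32, 16]]

rng = random.Random(0)  # seeded so repeated runs make the same choice
augmented = random_horizontal_flip(rescale(image), rng)
print(augmented)
```

Because the flip (like the rotations, shifts, shears and zooms) is drawn at random for every batch, the model sees a slightly different version of each training image in every epoch.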

```text
Found 2000 images belonging to 2 classes.
```
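The "2 classes" are inferred from the subdirectory names under `train_dir`, which `flow_from_directory` sorts alphanumerically and numbers from 0; on the real generator the result is available as `train_generator.class_indices`. The sketch below reproduces that mapping on a throwaway directory tree shaped like `cats_and_dogs_filtered/train`, using a hypothetical helper `infer_class_indices`.

```python
# Sketch: infer binary class labels from subdirectory names, the way
# flow_from_directory does when no explicit class list is given.
import os
import tempfile

def infer_class_indices(directory):
    """Map each subdirectory name to an integer label in sorted order."""
    classes = sorted(
        name for name in os.listdir(directory)
        if os.path.isdir(os.path.join(directory, name))
    )
    return {name: i for i, name in enumerate(classes)}

with tempfile.TemporaryDirectory() as root:
    for name in ("dogs", "cats"):  # creation order does not matter
        os.makedirs(os.path.join(root, name))
    indices = infer_class_indices(root)

print(indices)  # {'cats': 0, 'dogs': 1}
```

With this mapping, the sigmoid output of the model can be read as the predicted probability that an image is a dog.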

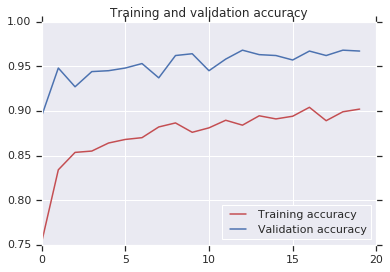

```text
Found 1000 images belonging to 2 classes.
```

```python
# fit() accepts generators directly; fit_generator() is deprecated in TF 2.x
history = model.fit(train_generator,
                    validation_data=validation_generator,
                    steps_per_epoch=100,
                    epochs=20,
                    validation_steps=50,
                    verbose=2)
```
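The values `steps_per_epoch=100` and `validation_steps=50` follow from the dataset sizes reported above and the batch size of 20: one epoch is one pass over the 2,000 training images, and each validation run covers all 1,000 validation images. A minimal sketch of that arithmetic (the helper name is hypothetical; `len(train_generator)` should give the same number for a real generator):

```python
# Sketch: number of batches needed to cover a dataset once.
import math

def steps_for(num_images, batch_size):
    """Batches needed to see every image once (the last batch may be partial)."""
    return math.ceil(num_images / batch_size)

train_steps = steps_for(2000, 20)
val_steps = steps_for(1000, 20)
print(train_steps, val_steps)  # 100 50
```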

```text
Epoch 1/20
100/100 - 17s - loss: 0.5283 - acc: 0.7525 - val_loss: 0.3843 - val_acc: 0.8940
Epoch 2/20
100/100 - 14s - loss: 0.3678 - acc: 0.8340 - val_loss: 0.2040 - val_acc: 0.9480
Epoch 3/20
100/100 - 15s - loss: 0.3352 - acc: 0.8535 - val_loss: 0.3987 - val_acc: 0.9270
Epoch 4/20
100/100 - 15s - loss: 0.3432 - acc: 0.8550 - val_loss: 0.2987 - val_acc: 0.9440
Epoch 5/20
100/100 - 15s - loss: 0.3391 - acc: 0.8640 - val_loss: 0.3390 - val_acc: 0.9450
Epoch 6/20
100/100 - 14s - loss: 0.3135 - acc: 0.8680 - val_loss: 0.3465 - val_acc: 0.9480
Epoch 7/20
100/100 - 14s - loss: 0.3113 - acc: 0.8700 - val_loss: 0.3115 - val_acc: 0.9530
Epoch 8/20
100/100 - 15s - loss: 0.2901 - acc: 0.8820 - val_loss: 0.5042 - val_acc: 0.9370
Epoch 9/20
100/100 - 15s - loss: 0.2912 - acc: 0.8865 - val_loss: 0.3065 - val_acc: 0.9620
Epoch 10/20
100/100 - 15s - loss: 0.2944 - acc: 0.8760 - val_loss: 0.2641 - val_acc: 0.9640
Epoch 11/20
100/100 - 14s - loss: 0.2831 - acc: 0.8810 - val_loss: 0.4515 - val_acc: 0.9450
Epoch 12/20
100/100 - 15s - loss: 0.2682 - acc: 0.8895 - val_loss: 0.3231 - val_acc: 0.9580
Epoch 13/20
100/100 - 15s - loss: 0.2748 - acc: 0.8840 - val_loss: 0.2427 - val_acc: 0.9680
Epoch 14/20
100/100 - 15s - loss: 0.2669 - acc: 0.8945 - val_loss: 0.3075 - val_acc: 0.9630
Epoch 15/20
100/100 - 15s - loss: 0.2732 - acc: 0.8910 - val_loss: 0.2629 - val_acc: 0.9620
Epoch 16/20
100/100 - 14s - loss: 0.2634 - acc: 0.8940 - val_loss: 0.3864 - val_acc: 0.9570
Epoch 17/20
100/100 - 14s - loss: 0.2473 - acc: 0.9040 - val_loss: 0.2648 - val_acc: 0.9670
Epoch 18/20
100/100 - 15s - loss: 0.2767 - acc: 0.8890 - val_loss: 0.2519 - val_acc: 0.9620
Epoch 19/20
100/100 - 17s - loss: 0.2660 - acc: 0.8990 - val_loss: 0.2495 - val_acc: 0.9680
Epoch 20/20
100/100 - 15s - loss: 0.2535 - acc: 0.9020 - val_loss: 0.2682 - val_acc: 0.9670
```

```python
import matplotlib.pyplot as plt

acc = history.history['acc']
val_acc = history.history['val_acc']
loss = history.history['loss']
val_loss = history.history['val_loss']

epochs = range(len(acc))

# Plot training and validation accuracy per epoch
plt.plot(epochs, acc, 'r', label='Training accuracy')
plt.plot(epochs, val_acc, 'b', label='Validation accuracy')
plt.title('Training and validation accuracy')
plt.legend(loc=0)

# A plt.figure() call here would open a new, empty figure; use one only
# before plotting loss and val_loss in the same way.
plt.show()
```
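Besides plotting, the same `history.history` dictionary can be queried directly, for example to find the epoch with the best validation accuracy. The sketch below does that on a plain dict holding the `val_acc` column copied by hand from the training log above; with the real object you would pass `history.history` instead.

```python
# Sketch: pick the best epoch from a Keras-style history dict.
# The values are the val_acc column of the training log, typed in by hand.
val_acc = [0.8940, 0.9480, 0.9270, 0.9440, 0.9450, 0.9480, 0.9530,
           0.9370, 0.9620, 0.9640, 0.9450, 0.9580, 0.9680, 0.9630,
           0.9620, 0.9570, 0.9670, 0.9620, 0.9680, 0.9670]
history_dict = {"val_acc": val_acc}

def best_epoch(history, key="val_acc"):
    """Return (1-based epoch, value) of the first maximum of history[key]."""
    values = history[key]
    best = max(range(len(values)), key=values.__getitem__)  # first maximum wins
    return best + 1, values[best]

epoch, score = best_epoch(history_dict)
print(f"best {score:.4f} at epoch {epoch}")  # best 0.9680 at epoch 13
```

Note that validation accuracy stays above training accuracy throughout the log; a likely reason is that the training images are augmented and dropout is active only during training, which makes the training task harder than the validation one.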

Figure: Training and validation accuracy