Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

- Category: Article

- Created: February 12, 2022 3:57 PM

- Status: Open

- URL: https://openaccess.thecvf.com/content_ICCV_2017/papers/Zhu_Unpaired_Image-To-Image_Translation_ICCV_2017_paper.pdf

- Updated: February 15, 2022 3:03 PM

Highlights

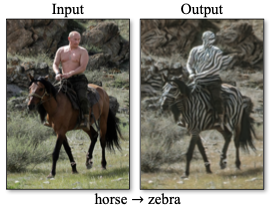

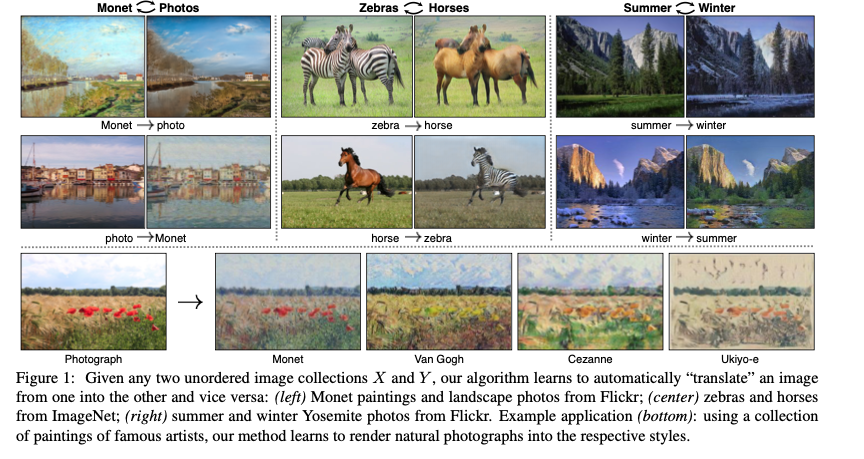

- We present an approach for learning to translate an image from a source domain \(X\) to a target domain \(Y\) in the absence of paired examples.

- Our goal is to learn a mapping \(G: X \rightarrow Y\) such that the distribution of images from \(G(X)\) is indistinguishable from the distribution \(Y\) using an adversarial loss. Because this mapping is highly under-constrained, we couple it with an inverse mapping \(F: Y \rightarrow X\) and introduce a cycle consistency loss to enforce \(F(G(X)) \approx X\) (and vice versa).

Methods

Adversarial Loss

We apply adversarial losses to both mapping functions. For the mapping function \(G: X \rightarrow Y\) and its dis- criminator \(D_Y\) , we express the objective as:

\[ \begin{aligned}\mathcal{L}_{\mathrm{GAN}}\left(G, D_{Y}, X, Y\right) &=\mathbb{E}_{y \sim p_{\mathrm{dau}}(y)}\left[\log D_{Y}(y)\right] \\&+\mathbb{E}_{x \sim p_{\operatorname{man}}(x)}\left[\log \left(1-D_{Y}(G(x))\right]\right.\end{aligned} \]

where \(G\) tries to generate images \(G(x)\) that look similar to images from domain \(Y\) , while \(D_Y\) aims to distinguish between translated samples \(G(x)\) and real samples \(y\). \(G\) aims to minimize this objective against an adversary \(G\) that tries to maximize it. We introduce a similar adversarial loss for the mapping function \(F: Y \rightarrow X\).

Cycle Consistency Loss

Adversarial training can, in theory, learn mappings \(G\) and \(F\) that produce outputs identically distributed as target domains \(Y\) and \(X\) respectively. However, with large enough capacity, a network can map the same set of input images to any random permutation of images in the target domain, where any of the learned mappings can induce an output distribution that matches the target distribution. Thus, adversarial losses alone cannot guarantee that the learned function can map an individual input \(x_i\) to a desired output \(y_i\).

To further reduce the space of possible mapping functions, we argue that the learned mapping functions should be cycle-consistent: for each image \(x\) from domain \(X\), the image translation cycle should be able to bring \(x\) back to the original image.

\[ x \rightarrow G(x) \rightarrow F(G(x)) \approx x \]

\[ \begin{aligned}\mathcal{L}_{\mathrm{cyc}}(G, F) &=\mathbb{E}_{x \sim p_{\text {dsat }}(x)}\left[\|F(G(x))-x\|_{1}\right] \\&+\mathbb{E}_{y \sim p_{\text {daca }}(y)}\left[\|G(F(y))-y\|_{1}\right]\end{aligned} \]

Full Objective

\[ \begin{aligned}\mathcal{L}\left(G, F, D_{X}, D_{Y}\right) &=\mathcal{L}_{\mathrm{GAN}}\left(G, D_{Y}, X, Y\right) \\&+\mathcal{L}_{\mathrm{GAN}}\left(F, D_{X}, Y, X\right) \\&+\lambda \mathcal{L}_{\mathrm{cyc}}(G, F)\end{aligned} \]

where λ controls the relative importance of the two objectives. We aim to solve:

\[ G^{*}, F^{*}=\arg \min _{G, F} \max _{D_{x}, D_{Y}} \mathcal{L}\left(G, F, D_{X}, D_{Y}\right) \]

Limitation

On translation tasks that involve color and texture changes, as many of those reported above, the method often succeeds.

We have also explored tasks that require geometric changes, with little success.Handling more varied and extreme transformations, especially geo- metric changes, is an important problem for future work.

Some failure cases are caused by the distribution characteristics of the training datasets.