Practical Guide for Competition

Define your goals.

What you can get out of your participation? 1. To learn more about an interesting problem 2. To get acquainted with new software tools 3. To hunt for a medal

Working with ideas

- Organize ideas in some structure

- Select the most important and promising ideas

- Try to understand the reasons why something does/doesn’t work

Initial pipeline

- Get familiar with problem domain

- Start with simple (or even primitive) solution

- Debug full pipeline − From reading data to writing submission file

- “From simple to complex” − I prefer to start with Random Forest rather than Gradient Boosted Decision Trees

data loading

- Do basic preprocessing and convert csv/txt files into hdf5/npy for much faster loading

- Do not forget that by default data is stored in 64-bit arrays, most of the times you can safely downcast it to 32-bits

- Large datasets can be processed in chunks

Performance evaluation

- Extensive validation is not always needed

- Start with fastest models - such as LightGBM

Everything is a hyperparameter

Sort all parameters by these principles: 1. Importance 2. Feasibility 3. Understanding

Note: changing one parameter can affect the whole pipeline

tricks

- Fast and dirty always better

- Don’t pay too much attention to code quality

- Keep things simple: save only important things

- If you feel uncomfortable with given computational resources

- rent a larger server

- Use good variable names

- If your code is hard to read — you definitely will have problems soon or later

- Keep your research reproducible

- Fix random seed − Write down exactly how any features were generated − Use Version Control Systems (VCS, for example, git)

- Reuse code − Especially important to use same code for train and test stages

- Read papers

- For example, how to optimize AUC

- Read forums and examine kernels first

- Code organization

- keeping it clean

- macros

- test/val

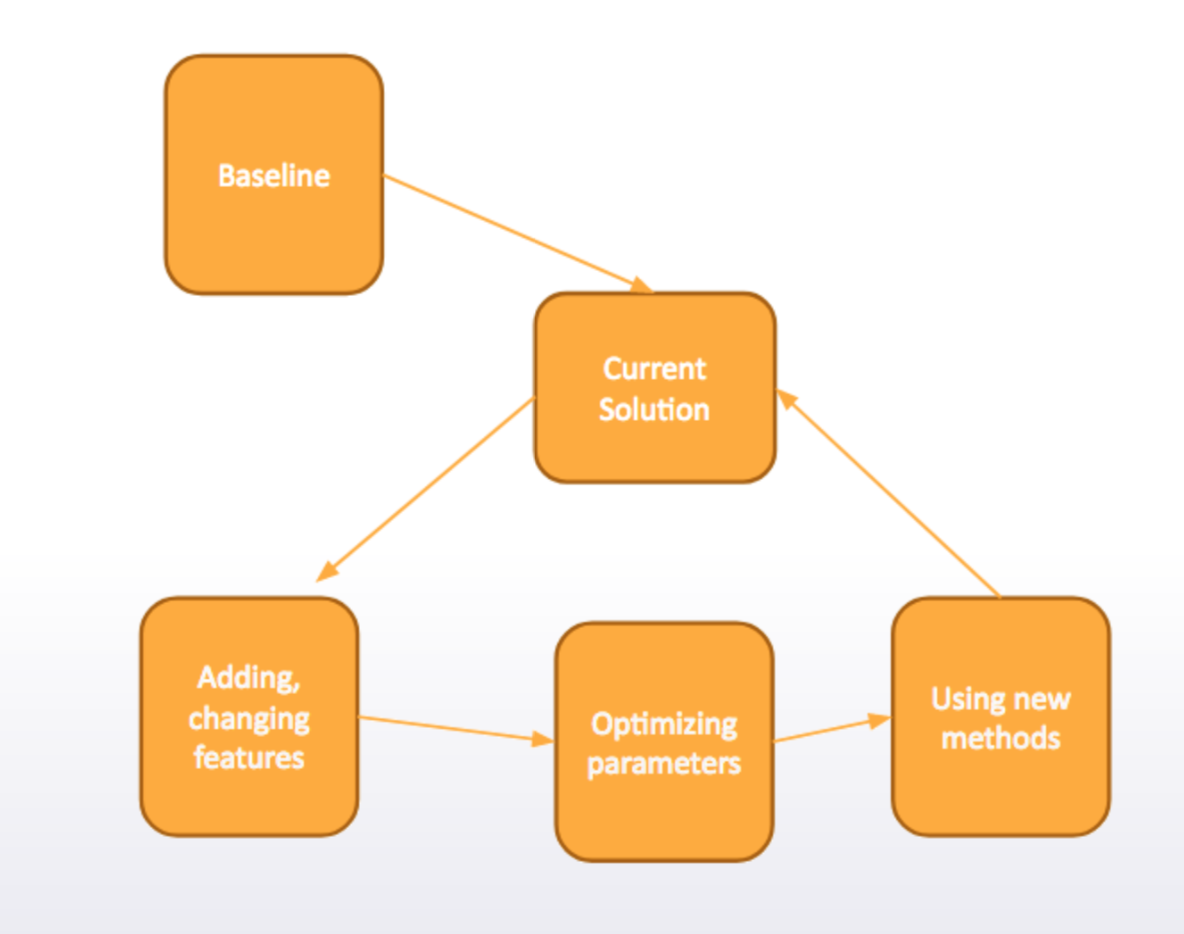

Pipeline detail

| Procedure | days |

|---|---|

| Understand the problem | 1 ~ 2 days |

| Exploratory data analysis | 1 ~ 2 days |

| Define cv strategy | 1 day |

| Feature Engineering | until last 3 ~ 4 days |

| Modeling | Until last 3 ~ 4 days |

| Ensembling | last 3 ~ 4 days |

Understand broadly the problem

- type of problem

- How big is the dataset

- What is the metric

- Previous code revelant

- Hardware needed (cpu, gpu ....)

- Software needed (TF, Sklearn, xgboost, lightgBM)

EDA

see the blog Exploratory data analysis

Define cv strategy

- This setp is critical

- Is time is important? Time-based validation

- Different entities than the train. StratifiedKFold Validation

- Is it completely random? Random validation

- Combination of all the above

- Use the leader board to test

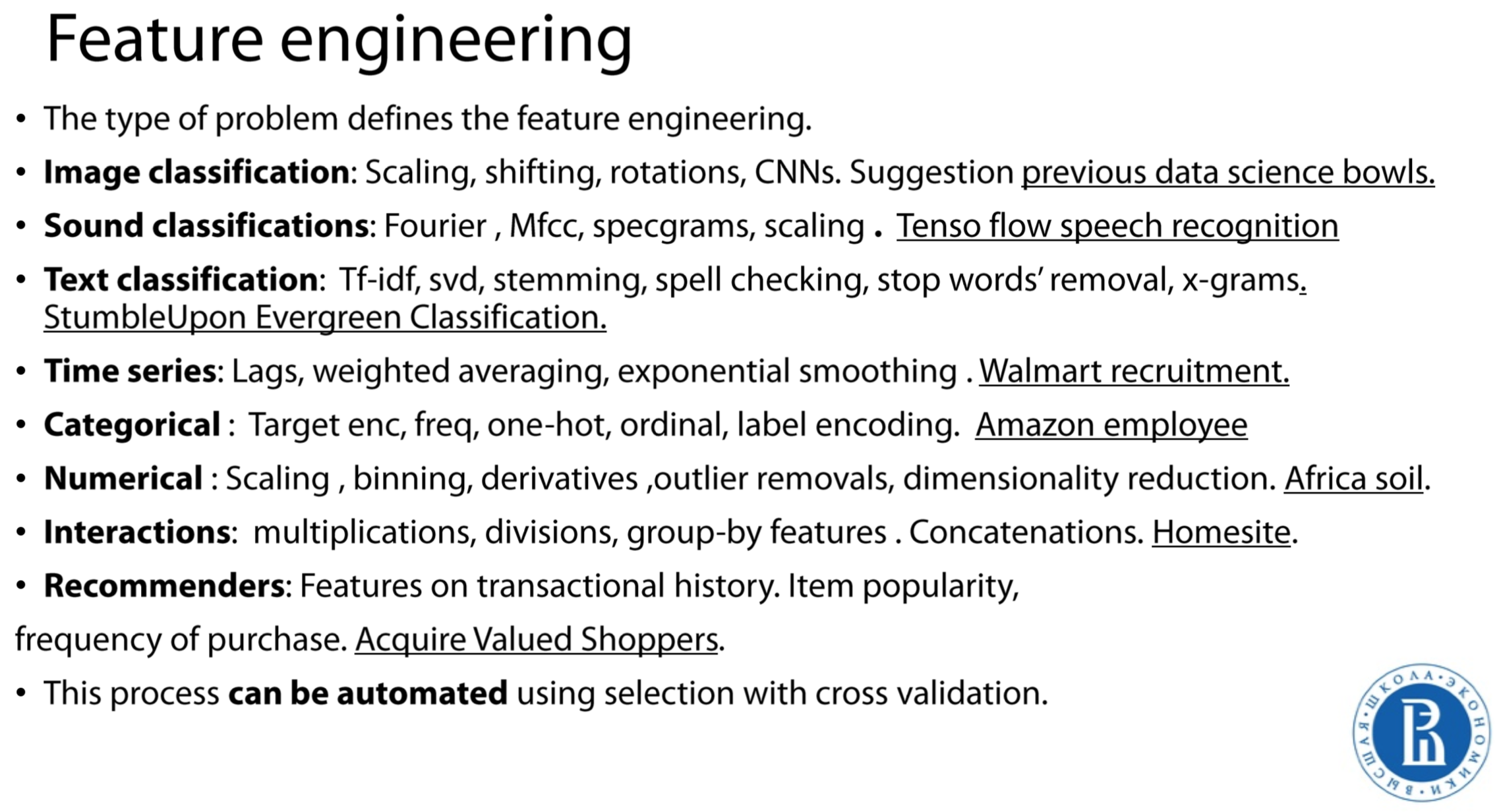

Feature Engineering

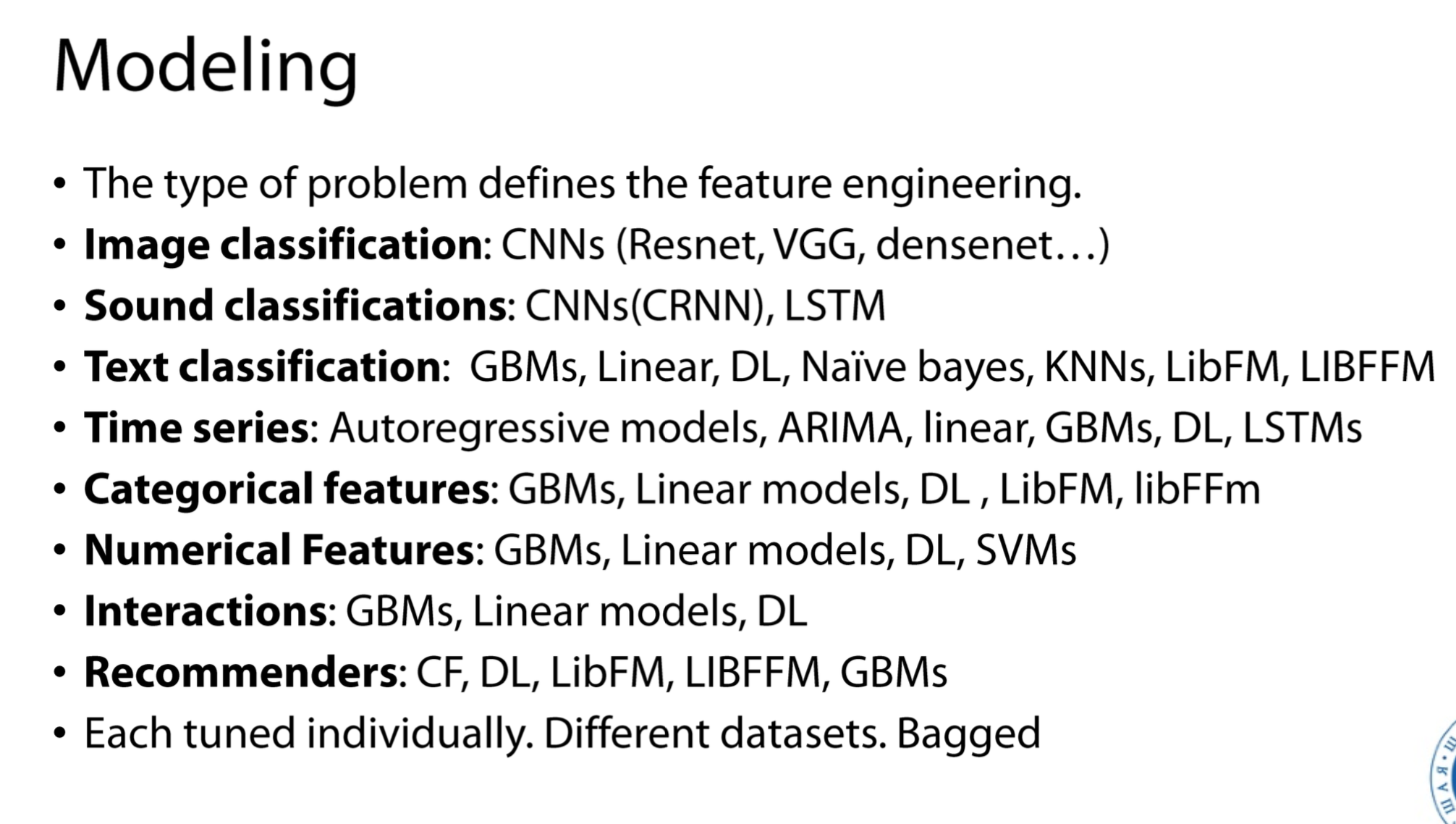

Modeling

Ensembling