What is the output of logistic regression?

\[h_{\theta}(x) = P(y = 1 \mid x;\theta)\]

That is, the probability that y = 1 (default), given x and the parameters θ.

What is the input of logistic regression?

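\[ z = \beta_{0} + \beta_{1}x_{1} + \beta_{2}x_{2} + \cdots \]

That is, the same linear combination of the features that linear regression uses, with parameters \(\theta = (\beta_{0}, \beta_{1}, \beta_{2}, \ldots)\).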

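- So the decision boundary of logistic regression is linear: it is the hyperplane \(\beta_{0} + \beta_{1}x_{1} + \beta_{2}x_{2} + \cdots = 0\), where the predicted probability equals 0.5.

- At its core, logistic regression is still a linear regression model, except that the linear combination models the log-odds of y = 1 rather than y itself. Some articles describe this as adding one extra function mapping between the features and the output.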
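A minimal plain-Python sketch of the linear input and the resulting linear decision boundary. The function names `linear_predictor` and `predict_class` and the coefficients are hypothetical; the sketch assumes the usual 0.5 probability threshold, under which predicting y = 1 is the same as checking \(z \ge 0\).

```python
from typing import Sequence


def linear_predictor(beta: Sequence[float], x: Sequence[float]) -> float:
    """Compute z = beta_0 + beta_1*x_1 + beta_2*x_2 + ...

    beta[0] is the intercept; beta[1:] pairs up with the features in x.
    """
    if len(beta) != len(x) + 1:
        raise ValueError("beta must have one more entry than x (the intercept)")
    return beta[0] + sum(b * xi for b, xi in zip(beta[1:], x))


def predict_class(beta: Sequence[float], x: Sequence[float]) -> int:
    """Predict 1 on the non-negative side of the hyperplane z = 0, else 0."""
    return 1 if linear_predictor(beta, x) >= 0 else 0


# Hypothetical coefficients: the boundary is -3 + x1 + x2 = 0, i.e. the line x1 + x2 = 3
beta = [-3.0, 1.0, 1.0]
print(linear_predictor(beta, [1.0, 1.0]))  # -1.0
print(predict_class(beta, [1.0, 1.0]))     # 0
print(predict_class(beta, [2.0, 2.0]))     # 1
```

Every point is classified only by which side of the straight line \(x_1 + x_2 = 3\) it falls on, which is what "linear decision boundary" means here.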

What connects the input to the output?

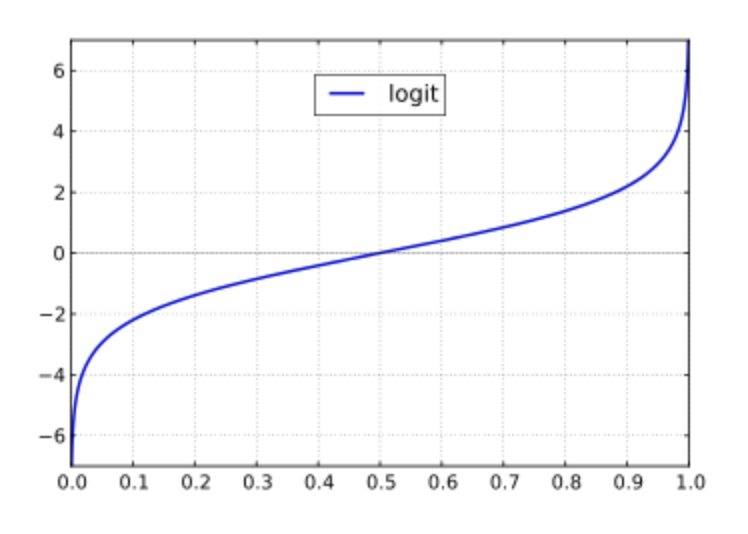

Logit function:

\[\operatorname{logit}(p) = \ln(\text{odds}) = \ln\left(\frac{p}{1-p}\right)\]

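Domain \((0, 1)\), range \(\mathbb{R}\). The endpoints are excluded because \(\ln(\text{odds})\) is undefined at p = 0 and at p = 1.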
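A minimal Python sketch of the logit function (`logit` is a hypothetical helper name), which rejects inputs outside the open interval (0, 1):

```python
import math


def logit(p: float) -> float:
    """Log-odds ln(p / (1 - p)); defined only for 0 < p < 1."""
    if not 0.0 < p < 1.0:
        raise ValueError("logit is only defined for 0 < p < 1")
    return math.log(p / (1.0 - p))


print(logit(0.5))  # 0.0  (odds 1:1)
print(logit(0.8))  # ln(4), roughly 1.386
print(logit(0.2))  # -ln(4), roughly -1.386
```

The last two lines illustrate the symmetry \(\operatorname{logit}(1-p) = -\operatorname{logit}(p)\).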

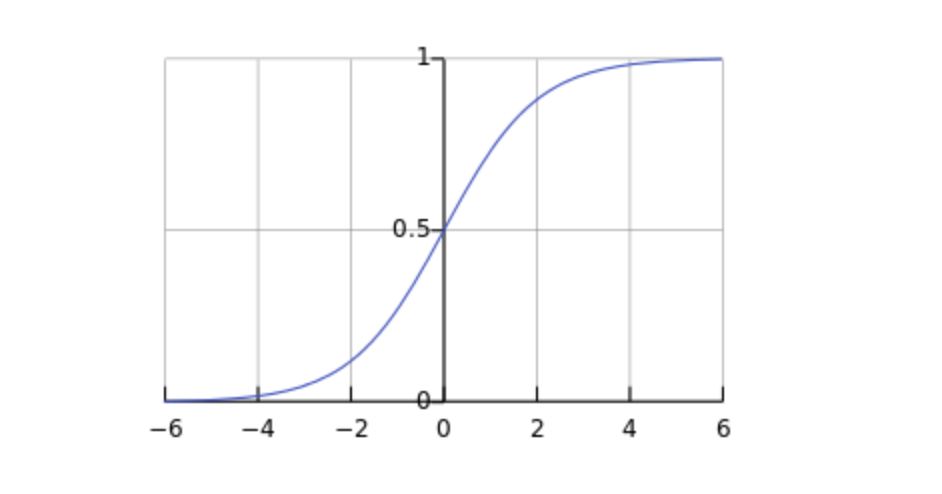

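Inverting the function above gives the sigmoid function, with domain \(\mathbb{R}\) and range \((0, 1)\); its output is the probability p. Setting the logit equal to the linear input, \(\ln\left(\frac{p}{1-p}\right) = \beta_{0} + \beta_{1}x_{1} + \beta_{2}x_{2} + \cdots\), and solving for p gives \(p = \operatorname{sigmoid}(\beta_{0} + \beta_{1}x_{1} + \beta_{2}x_{2} + \cdots)\), which is how the linear input is turned into a probability.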

\[\operatorname{sigmoid}(\alpha) = \operatorname{logit}^{-1}(\alpha) = \frac{1}{1+e^{-\alpha}}\]
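A minimal Python sketch of the sigmoid, plus a check that it undoes the logit. The names are hypothetical; branching on the sign of the input is one common way to keep `math.exp` from overflowing on large negative inputs.

```python
import math


def sigmoid(a: float) -> float:
    """1 / (1 + e^{-a}).

    Mathematically the result lies in (0, 1); in floating point it can
    round to exactly 0.0 or 1.0 when |a| is very large.
    """
    # For a < 0, use the equivalent form e^a / (1 + e^a) so exp never
    # receives a large positive argument.
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)


def logit(p: float) -> float:
    """Log-odds ln(p / (1 - p)), for 0 < p < 1."""
    return math.log(p / (1.0 - p))


print(sigmoid(0.0))  # 0.5
for p in (0.1, 0.5, 0.9):
    # sigmoid(logit(p)) recovers p up to floating-point rounding
    print(p, sigmoid(logit(p)))
```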

Maximum likelihood estimation is used to estimate the parameters θ:

\[L(\theta) = \prod_{i:y_{i}=1}p(x_{i})\prod_{i^{\prime}:y_{i^{\prime}}=0}(1-p(x_{i^{\prime}}))\]
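A direct translation of \(L(\theta)\) into Python, assuming the model's probabilities \(p(x_i)\) have already been computed. The function name `likelihood` and the numbers are hypothetical.

```python
from typing import Sequence


def likelihood(probs: Sequence[float], labels: Sequence[int]) -> float:
    """L = prod_{i: y_i = 1} p(x_i) * prod_{i': y_i' = 0} (1 - p(x_i')).

    probs[i] is the model's p(x_i) = P(y_i = 1 | x_i; theta); labels[i] is 0 or 1.
    """
    L = 1.0
    for p, y in zip(probs, labels):
        # Observations with y = 1 contribute p; those with y = 0 contribute 1 - p
        L *= p if y == 1 else (1.0 - p)
    return L


# Hypothetical predicted probabilities for four observations
probs = [0.9, 0.2, 0.7, 0.4]
labels = [1, 0, 1, 0]
print(likelihood(probs, labels))  # 0.9 * 0.8 * 0.7 * 0.6 = 0.3024, up to rounding
```

For large n, a product of many probabilities quickly underflows toward 0, which is one practical reason to work with the log-likelihood instead.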

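- Find the coefficients that maximize the likelihood, then use them for prediction.

- Maximizing the likelihood is equivalent to minimizing the cost function \(J(\theta)\), the negative log-likelihood: taking the log turns the product into a sum, and flipping the sign turns maximization into minimization.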

\[J(\theta) = - \sum_{i=1}^{n}\left[y_{i}\log P(y_{i} = 1 \mid x_{i};\theta) + (1 - y_{i})\log\left(1 - P(y_{i}=1 \mid x_{i};\theta)\right)\right]\]
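The two bullets and \(J(\theta)\) can be tied together with a minimal plain-Python sketch: `cost` evaluates \(J\) for given coefficients, and `fit` minimizes it by batch gradient descent, using the gradient \(\partial J / \partial \beta_j = \sum_i (p(x_i) - y_i)\,x_{ij}\) with \(x_{i0} = 1\) for the intercept. Gradient descent is a swapped-in optimizer chosen for simplicity; the learning rate, iteration count, toy data, and all function names are assumptions, and library implementations typically use other solvers.

```python
import math
from typing import List, Sequence


def sigmoid(a: float) -> float:
    # Sign branch avoids overflow in math.exp for large |a|
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)


def predict_proba(beta: Sequence[float], x: Sequence[float]) -> float:
    """p(x) = sigmoid(beta_0 + beta_1*x_1 + ...)."""
    z = beta[0] + sum(b * xi for b, xi in zip(beta[1:], x))
    return sigmoid(z)


def cost(beta, X, y) -> float:
    """J = -sum[y_i log p(x_i) + (1 - y_i) log(1 - p(x_i))]."""
    eps = 1e-12  # clamp so log never sees exactly 0
    total = 0.0
    for xi, yi in zip(X, y):
        p = min(max(predict_proba(beta, xi), eps), 1.0 - eps)
        total += yi * math.log(p) + (1 - yi) * math.log(1.0 - p)
    return -total


def fit(X, y, lr: float = 0.05, n_iter: int = 5000) -> List[float]:
    """Batch gradient descent on J, starting from all-zero coefficients."""
    beta = [0.0] * (len(X[0]) + 1)
    for _ in range(n_iter):
        grad = [0.0] * len(beta)
        for xi, yi in zip(X, y):
            err = predict_proba(beta, xi) - yi  # p(x_i) - y_i
            grad[0] += err                      # intercept: x_i0 = 1
            for j, xij in enumerate(xi, start=1):
                grad[j] += err * xij
        beta = [b - lr * g for b, g in zip(beta, grad)]
    return beta


# Hypothetical 1-feature data with overlapping classes, so the MLE is finite
X = [[0.5], [1.0], [1.5], [2.0], [2.5], [3.0], [3.5], [4.0]]
y = [0, 0, 1, 0, 1, 0, 1, 1]
print(cost([0.0, 0.0], X, y))  # 8 * ln 2, roughly 5.545 (every p = 0.5)
beta = fit(X, y)
print(beta, cost(beta, X, y))  # lower cost than at beta = 0
print(predict_proba(beta, [4.0]), predict_proba(beta, [0.5]))
```

Because \(J(\theta) = -\ln L(\theta)\), the coefficients that minimize `cost` are the same ones that maximize the likelihood; the `eps` clamp only guards against `log(0)`.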